Superteams.ai Digest: AI in Retail: Impact and Case Studies

In this edition of the Superteams.ai monthly AI digest, we look at the impact of AI on retail and the latest trends in the open-source AI world.

Key Takeaways:

- Take a deep dive into the impact of AI on retail.

- Learn more about Phi-3.5-MoE, the newest member of the Phi model family.

- Get a glimpse of xAI’s Grok-2 and Grok-2 mini models.

- Catch up on NVIDIA’s Mistral-NeMo-Minitron 8B.

- Top trending blogs and tutorials.

- Cutting-edge research on visual language models, hand-tracking tech, and more.

- Plus, stay updated with the latest happenings at Superteams.ai.

Highlights

A Quick Recap of the Top AI Trends of the Month

Phi-3.5-MoE: The newest member of Microsoft’s Phi model family.

- Expert Composition: Contains 16 experts, each with 3.8 billion parameters.

- Total Model Size: 42 billion parameters in total, of which 6.6 billion are active at a time because only two experts are used.

- Performance: Outperforms dense models of a similar size in both quality and performance.

- Language Support: Capable of supporting over 20 languages.

- Safety Strategy: Utilizes a robust post-training process combining Supervised Fine-Tuning (SFT) and Direct Preference Optimization (DPO).

- Datasets: Mixes open-source and proprietary synthetic instruction and preference datasets, with a focus on helpfulness, harmlessness, and multiple safety categories.

- Context Length: Supports a context length of up to 128K tokens, suitable for many long-context tasks.
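Phi-3.5-MoE activates only two of its 16 experts at a time, which is why just 6.6B of its 42B parameters are used per token. The sketch below illustrates generic top-2 routing as commonly used in mixture-of-experts layers: a router scores every expert, the two highest-scoring experts run, and their outputs are mixed with renormalized softmax weights. The router logits and the toy scalar "experts" are hypothetical, and this is not Microsoft's implementation.

```python
import math
from typing import Callable, List, Sequence, Tuple


def top2_route(router_logits: Sequence[float]) -> List[Tuple[int, float]]:
    """Pick the two highest-scoring experts and renormalize their softmax weights."""
    if len(router_logits) < 2:
        raise ValueError("need at least two experts")
    # Softmax over all expert logits (subtract the max for numerical stability).
    m = max(router_logits)
    exps = [math.exp(x - m) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Indices of the two largest probabilities.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:2]
    # Renormalize so the two selected weights sum to 1.
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x: float, router_logits: Sequence[float],
                experts: Sequence[Callable[[float], float]]) -> float:
    """Run only the selected experts and return their weighted sum."""
    return sum(w * experts[i](x) for i, w in top2_route(router_logits))


# Toy setup: 16 hypothetical "experts", each scaling its input by a different factor.
experts = [lambda x, k=k: k * x for k in range(16)]
logits = [0.0] * 16
logits[3], logits[7] = 2.0, 2.0  # experts 3 and 7 score highest (tied)
print(top2_route(logits))                  # [(3, 0.5), (7, 0.5)]
print(moe_forward(1.0, logits, experts))   # 0.5*3 + 0.5*7 = 5.0
```

Only the two selected experts are ever called, which is what keeps the per-token compute close to that of a much smaller dense model.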

xAI introduces Grok-2 and Grok-2 Mini models.

- Image Generation: Powered by Flux.1, enabling advanced image generation capabilities.

- Performance: Grok-2 ranks third on the LMSYS Chatbot Arena leaderboard, placing it ahead of many other leading models by Elo score.

- Model Details: Specifics like model size and context length are not yet disclosed.

- Availability: Models are available to X Premium users, with an enterprise API release anticipated later this month.
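For readers unfamiliar with arena-style rankings: in an Elo system, each pairwise comparison nudges both models' ratings toward the observed outcome. The sketch below uses the textbook Elo formulas with an assumed K-factor of 32. LMSYS computes its published leaderboard with a related statistical model, so this illustrates the idea rather than reproducing their numbers.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(rating_a: float, rating_b: float, score_a: float,
           k: float = 32.0) -> Tuple[float, float]:
    """Return new (rating_a, rating_b) after one head-to-head battle.

    score_a is 1.0 if A wins, 0.5 for a tie, 0.0 if A loses.
    """
    exp_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - exp_a)  # zero-sum: B loses what A gains
    return rating_a + delta, rating_b - delta


print(expected_score(1500, 1500))  # 0.5
print(update(1500, 1500, 1.0))     # (1516.0, 1484.0)
```

An upset (a lower-rated model beating a higher-rated one) moves ratings more than an expected win, which is how a strong newcomer can climb the leaderboard quickly.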

NVIDIA introduces Mistral-NeMo-Minitron 8B

- Width-pruned version of the Mistral NeMo 12B model.

- Fine-Tuning: The original 12B model was fine-tuned with 127B tokens to address distribution shifts.

- Pruning Process:

  - MLP intermediate dimension reduced from 14,336 to 11,520.

  - Hidden size reduced from 5,120 to 4,096.

  - Attention heads and layers retained.

- Distillation Parameters: Used a peak learning rate of 1e-4, cosine decay, and 380B tokens.

- Performance: Outperforms state-of-the-art models of similar size.

- Framework Integration: NVIDIA plans to roll out support gradually in NVIDIA NeMo, its framework for generative AI.
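To make "width pruning" concrete, the sketch below shrinks one MLP's intermediate dimension by keeping the neurons with the highest importance scores and slicing both projection matrices to match. It scores neurons by mean absolute activation on a small calibration batch, a simple stand-in for activation-based importance. NVIDIA's actual scoring, the hidden-size pruning, gated MLP variants, and the subsequent distillation are not reproduced, and all names here are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def activation_importance(activations: Matrix) -> List[float]:
    """Mean absolute activation per intermediate neuron.

    activations: one row per calibration token, one column per neuron.
    """
    n_tokens = len(activations)
    n_neurons = len(activations[0])
    return [sum(abs(row[j]) for row in activations) / n_tokens
            for j in range(n_neurons)]


def prune_mlp_width(w_up: Matrix, w_down: Matrix,
                    importance: List[float], keep: int) -> Tuple[Matrix, Matrix]:
    """Keep the `keep` most important intermediate neurons.

    w_up:   intermediate_dim x hidden_dim (row i produces neuron i)
    w_down: hidden_dim x intermediate_dim (column i consumes neuron i)
    """
    if not 0 < keep <= len(importance):
        raise ValueError("keep must be in 1..intermediate_dim")
    ranked = sorted(range(len(importance)),
                    key=lambda i: importance[i], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original neuron order
    new_up = [w_up[i] for i in kept]
    new_down = [[row[i] for i in kept] for row in w_down]
    return new_up, new_down


# Toy MLP: hidden_dim = 2, intermediate_dim = 4, pruned to 3 neurons
# (the Minitron change described above is 14,336 -> 11,520 at full scale).
w_up = [[1, 0], [0, 1], [1, 1], [2, 2]]
w_down = [[1, 2, 3, 4], [5, 6, 7, 8]]
acts = [[0.1, -2.0, 0.5, 1.0], [0.3, 2.0, -0.5, 3.0]]
imp = activation_importance(acts)  # neuron 0 is least important
up, down = prune_mlp_width(w_up, w_down, imp, keep=3)
print(up)    # [[0, 1], [1, 1], [2, 2]]
print(down)  # [[2, 3, 4], [6, 7, 8]]
```

Because only whole neurons are removed, the pruned layer stays a smaller dense layer, which is why width pruning speeds up inference without special sparse kernels; distillation then recovers accuracy lost in pruning.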

Sneak Peek into Some Top Trending Blogs from August

Streamline Property Data Management: Advanced Data Extraction and Retrieval with Indexify

The blog explores how Indexify enhances real-time data extraction and retrieval, with a focus on its application in the real estate industry. It details the creation of a knowledge base, scaling techniques for large datasets, and methods for storing results in a structured database for future analysis. The article highlights Indexify's role in enabling deeper, more efficient data analysis.
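As a generic illustration of the last step, storing extracted results in a structured database, the sketch below writes property fields into SQLite and runs an aggregate query. It does not use Indexify's API; the `properties` table, its columns, and the sample records are hypothetical stand-ins for whatever an extraction pipeline produces.

```python
import sqlite3
from typing import Dict, List


def store_properties(conn: sqlite3.Connection, records: List[Dict]) -> None:
    """Create the table if needed and insert extracted property records."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS properties (
               id INTEGER PRIMARY KEY,
               address TEXT NOT NULL,
               city TEXT NOT NULL,
               bedrooms INTEGER,
               price REAL
           )"""
    )
    # Named placeholders let sqlite3 bind values from each dict safely.
    conn.executemany(
        "INSERT INTO properties (address, city, bedrooms, price) "
        "VALUES (:address, :city, :bedrooms, :price)",
        records,
    )
    conn.commit()


def average_price_by_city(conn: sqlite3.Connection) -> Dict[str, float]:
    """Example downstream analysis over the stored extraction results."""
    rows = conn.execute(
        "SELECT city, AVG(price) FROM properties GROUP BY city ORDER BY city"
    ).fetchall()
    return {city: avg for city, avg in rows}


conn = sqlite3.connect(":memory:")
store_properties(conn, [
    {"address": "12 Oak St", "city": "Austin", "bedrooms": 3, "price": 450000.0},
    {"address": "9 Elm Ave", "city": "Austin", "bedrooms": 2, "price": 350000.0},
    {"address": "4 Pine Rd", "city": "Denver", "bedrooms": 4, "price": 600000.0},
])
print(average_price_by_city(conn))  # {'Austin': 400000.0, 'Denver': 600000.0}
```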

Building a Smart Chatbot Using Qdrant Cloud and LangChain for Customer Support Tickets

The blog details the creation of a data extraction pipeline using the Qdrant vector database and OpenAI for embeddings, focusing on efficient information retrieval and metadata filtering. It culminates in building a custom chatbot designed to answer customer support queries, showcasing the integration of these technologies. The article provides a comprehensive guide to leveraging vector databases in chatbot development.
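Vector search with metadata filtering, the core of the retrieval step described above, can be illustrated without a database: filter candidates on their payload, rank the rest by cosine similarity, and return the top matches. This is a plain-Python sketch of the concept, not the Qdrant client API. The tiny 3-dimensional vectors and the `category` payload field are made up for illustration; real embeddings would come from an embedding model.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query: Sequence[float], points: List[Dict], must: Dict[str, str],
           top_k: int = 2) -> List[Tuple[str, float]]:
    """Return (id, score) for the top_k points whose payload matches every `must` condition."""
    candidates = [
        p for p in points
        if all(p["payload"].get(k) == v for k, v in must.items())
    ]
    scored = [(p["id"], cosine(query, p["vector"])) for p in candidates]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


points = [
    {"id": "t1", "vector": [1.0, 0.0, 0.0], "payload": {"category": "billing"}},
    {"id": "t2", "vector": [0.9, 0.1, 0.0], "payload": {"category": "shipping"}},
    {"id": "t3", "vector": [0.7, 0.7, 0.0], "payload": {"category": "billing"}},
    {"id": "t4", "vector": [0.0, 0.0, 1.0], "payload": {"category": "billing"}},
]
hits = search([1.0, 0.0, 0.0], points, must={"category": "billing"})
print([pid for pid, _ in hits])  # ['t1', 't3']
```

Note that `t2` is very similar to the query but is excluded by the filter: metadata filtering narrows results to, for example, the right ticket category before similarity ranking decides the order.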

Harnessing the Power of Corrective RAG (CRAG): Building High-Precision Recommendation Systems with Qdrant & Llama 3

The tutorial guides users through building an advanced recommendation system using Corrective RAG (CRAG), enhanced by Qdrant and Llama 3 for precise retrieval and generation. It covers data preparation, system setup, and evaluation with Ragas, highlighting CRAG's role in improving recommendation accuracy. The blog concludes with insights on system performance and potential enhancements.
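The corrective step in CRAG can be sketched as a routing decision: a retrieval evaluator scores each retrieved document, and the pipeline either keeps the relevant documents, falls back to an external search, or combines both when the evaluator is unsure. The thresholds, the `fake_web_search` stub, and the precomputed relevance scores below are hypothetical; the tutorial's actual evaluator and fallback may differ.

```python
from typing import Callable, List, Tuple


def classify_retrieval(scores: List[float], upper: float = 0.7,
                       lower: float = 0.3) -> str:
    """Map evaluator confidence scores to a CRAG-style action label."""
    if any(s >= upper for s in scores):
        return "correct"      # at least one clearly relevant document
    if all(s < lower for s in scores):
        return "incorrect"    # nothing usable was retrieved
    return "ambiguous"


def corrective_context(docs: List[Tuple[str, float]],
                       web_search: Callable[[], List[str]],
                       upper: float = 0.7, lower: float = 0.3) -> List[str]:
    """Build the context that would be passed to the generator (e.g. Llama 3)."""
    action = classify_retrieval([score for _, score in docs], upper, lower)
    if action == "correct":
        return [text for text, score in docs if score >= upper]
    if action == "incorrect":
        return web_search()
    # Ambiguous: keep anything not clearly irrelevant and add external results.
    return [text for text, score in docs if score >= lower] + web_search()


def fake_web_search() -> List[str]:
    return ["external result"]  # hypothetical stand-in for a real search API


print(corrective_context([("doc A", 0.9), ("doc B", 0.2)], fake_web_search))  # ['doc A']
print(corrective_context([("doc A", 0.1), ("doc B", 0.2)], fake_web_search))  # ['external result']
print(corrective_context([("doc A", 0.5), ("doc B", 0.1)], fake_web_search))  # ['doc A', 'external result']
```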

Building a RAG Pipeline on Excel: Harnessing Qdrant and Open-Source LLMs for Stock Trading Data

This blog dives into building a dynamic Retrieval Augmented Generation (RAG) pipeline centered around Excel data, specifically using a stock trading dataset. It walks you through extracting key metrics like position count, price distribution, and aggregate holding quantity, all while transforming and storing the data in a Qdrant database. By the end, you'll see how this powerful RAG system delivers precise, insightful responses based on the stored trading information.
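The metric-extraction step can be sketched with the standard library: compute the position count, aggregate holding quantity, and a simple price summary, then render them as a short text chunk ready to be embedded and stored. To stay dependency-free, the sketch reads CSV text rather than an Excel file (a sheet could first be loaded with `pandas.read_excel`), and the column names (`symbol`, `quantity`, `price`) and sample rows are hypothetical; the blog's actual dataset and schema may differ.

```python
import csv
import io
import statistics
from typing import Dict


def trading_metrics(csv_text: str) -> Dict[str, float]:
    """Summarize positions: count, total quantity held, and price distribution."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    prices = [float(r["price"]) for r in rows]
    return {
        "position_count": len(rows),
        "aggregate_quantity": sum(int(r["quantity"]) for r in rows),
        "price_min": min(prices),
        "price_max": max(prices),
        "price_median": statistics.median(prices),
    }


def to_chunk(metrics: Dict[str, float]) -> str:
    """Render metrics as plain text, the kind of chunk one would embed for RAG."""
    return "; ".join(f"{name}={value}" for name, value in metrics.items())


sample = """symbol,quantity,price
AAA,100,10.5
BBB,50,20.0
CCC,25,30.0
"""
m = trading_metrics(sample)
print(m["position_count"], m["aggregate_quantity"], m["price_median"])  # 3 175 20.0
print(to_chunk(m))
```

Storing precomputed aggregates as text alongside raw rows lets the LLM answer summary questions directly instead of attempting arithmetic over retrieved rows.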

What’s New in AI Research?

A glance at the latest AI research

CogVLM2: Visual Language Models for Image and Video Understanding

Meet the CogVLM2 family, where advanced visual-language fusion meets state-of-the-art image and video understanding. The CogVLM series introduces models that understand and generate content from both images and videos, expanding the potential of large language models (LLMs) into new areas like document analysis, GUI comprehension, and temporal video grounding.

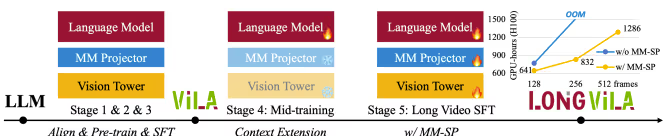

LongVILA: Scaling Long-Context Visual Language Models for Long Videos

NVIDIA's LongVILA redefines long-context visual-language models, pushing the boundaries of video understanding. By extending the number of frames the model can process from 8 to 1,024, LongVILA achieves strong results in tasks like video QA and captioning. Its Multi-Modal Sequence Parallelism (MM-SP) system scales training context length up to 2 million tokens and runs up to 5.7 times faster than ring-style sequence parallelism. Ready to dive into how this could revolutionize video analysis?

Constraining Dense Hand Surface Tracking with Elasticity

Discover how Meta researchers are advancing hand-tracking technology! Their algorithm tackles the long-standing challenge of tracking hands through complex gestures, self-contact, and occlusion. This innovation could transform everything from VR interactions to sign language recognition, opening up exciting new possibilities in human-computer interaction.

Sapiens: Foundation for Human Vision Models

Another Meta research paper introduces "Sapiens," a model family designed for key human-centric vision tasks such as 2D pose estimation, depth estimation, and more. With performance that scales across various benchmarks, Sapiens generalizes well to real-world data, even when labeled data is limited. Leveraging large-scale pretraining on human-specific datasets, these models are poised to become foundational in human vision applications.

What's New at Superteams.ai

Latest Blog Highlights

The Ethical Landscape of AI Content

In this blog, we delve into how AI is transforming the content creation landscape, acting both as a powerful tool for creators and as a source of new challenges, particularly around bias and authenticity. We explore practical approaches for implementing ethical AI, including AI guardrails, and examine its global impact and implications.

FLUX.1: A Deep Dive

In this blog, we explore the impressive capabilities of the FLUX.1 model by Black Forest Labs, highlighting its distinctive approach built on the Flow Matching technique and a diffusion transformer (DiT) architecture.
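Flow matching trains a model to predict a velocity field that carries samples between noise and data along a chosen path. The sketch below builds one training example for the simple straight-line (rectified flow) path: interpolate between a data sample and Gaussian noise at a random time t, and regress the model's output onto the constant velocity between them. The toy vectors and the all-zero "model prediction" are placeholders, and the convention that t = 0 is data and t = 1 is noise is an assumption; FLUX.1's exact training recipe is not reproduced here.

```python
import random
from typing import List, Tuple

Vector = List[float]


def flow_matching_pair(x0: Vector, noise: Vector, t: float) -> Tuple[Vector, Vector]:
    """Return (x_t, target_velocity) for the linear path x_t = (1 - t) * x0 + t * noise."""
    x_t = [(1.0 - t) * a + t * b for a, b in zip(x0, noise)]
    velocity = [b - a for a, b in zip(x0, noise)]  # d x_t / d t, constant along the path
    return x_t, velocity


def mse(pred: Vector, target: Vector) -> float:
    """Mean squared error: the flow matching regression loss for one sample."""
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(target)


rng = random.Random(0)
x0 = [0.5, -1.0, 2.0]                      # stand-in for an image latent
noise = [rng.gauss(0.0, 1.0) for _ in x0]  # Gaussian noise sample
t = rng.random()                           # random time in [0, 1)
x_t, v = flow_matching_pair(x0, noise, t)
prediction = [0.0] * len(x0)               # a real model would compute v_theta(x_t, t)
print(mse(prediction, v))
```

At generation time, a sampler starts from pure noise at t = 1 and integrates the learned velocity back toward t = 0; straight paths are what allow relatively few integration steps.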

INDUSTRY SPOTLIGHT

AI Tech Trends in Retail

Trend #1: Multimodal AI

What are Multimodal AI models?

Multimodal AI models are machine learning models that understand, interpret, and generate information from multiple types of sources, such as text, images, audio, and even video. Combining these sources allows them to generate more accurate predictions and insights.

Just as humans use multiple senses like sight and hearing to recognize people or objects, multimodal deep learning focuses on developing similar capabilities in computers, enabling them to process and integrate information from different types of data.
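One common way to integrate information from different types of data is early fusion: encode each modality into a vector, normalize the vectors so no modality dominates by scale, and concatenate them into one joint representation for a downstream model. The sketch below shows only that fusion step. The per-modality embeddings are made-up placeholders for the outputs of real text and image encoders, and many production multimodal models instead fuse modalities inside the network (for example, with cross-attention).

```python
import math
from typing import Dict, List


def l2_normalize(v: List[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def early_fusion(embeddings: Dict[str, List[float]]) -> List[float]:
    """Normalize each modality's embedding, then concatenate in a fixed key order."""
    fused: List[float] = []
    for modality in sorted(embeddings):  # fixed order keeps feature positions stable
        fused.extend(l2_normalize(embeddings[modality]))
    return fused


product = {
    "text": [3.0, 4.0],         # e.g. from a product-description encoder
    "image": [0.0, 10.0, 0.0],  # e.g. from a product-photo encoder
}
print(early_fusion(product))  # [0.0, 1.0, 0.0, 0.6, 0.8]
```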

Applications in Retail

- Marketing and Advertising: Retailers are using these AI models to create content across various formats, including text, images, video, and 3D assets, producing captivating marketing visuals with minimal prompting.

- Personalized Shopping Experiences: Retailers use AI to deliver customized shopping experiences, such as try-on product images or in-situ visualizations, enhancing customer engagement.

- Product Description Generation: Generative AI automatically creates detailed product descriptions, improving SEO with intelligent meta-tagging.

Trend #2: YOLO

What is YOLO?

“You Only Look Once,” or YOLO, is a real-time object detection algorithm known for its speed and accuracy, which make it well suited to identifying fast-moving objects such as cars and animals, and for its utility in real-world applications. Since its introduction in 2015, it has been released in many successive versions. In this edition, we discuss one of its recent versions, YOLOv8.

YOLOv8 combines deep learning and computer vision to deliver precise object detection and classification. These capabilities make it well suited to retailers looking to maximize operational efficiency.
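Single-pass detectors such as YOLO typically emit many overlapping candidate boxes and clean them up with non-maximum suppression (NMS): keep the most confident box, discard others that overlap it heavily, and repeat. The plain-Python sketch below shows that post-processing step with an assumed IoU threshold of 0.5. It is a generic, class-agnostic NMS, not Ultralytics' implementation; when using the `ultralytics` package, this step is handled for you during prediction.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Return indices of boxes kept after greedy non-maximum suppression."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop remaining boxes that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 (IoU ~0.68) and is suppressed
```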

Stand-out features for Retail:

- Speed and accuracy: YOLOv8 excels at detecting objects in real-time, allowing retailers to respond quickly to environmental changes.

- Flexibility: The model can be customized to meet specific retail needs, enabling precise analysis of visual data.

- Scalability: YOLOv8's architecture supports efficient processing of large volumes of retail data.

- Seamless integration: It is open source and can be easily incorporated into existing retail analytics systems, enhancing their capabilities without major disruption.

Applications in Retail

- Inventory Management: YOLOv8 helps retailers optimize their inventory by accurately monitoring products on shelves, preventing stockouts, and ensuring popular items are always available.

- Customer Behavior Analysis: The model tracks customer movements and interactions with products, providing insights into shopping patterns and preferences. This information helps retailers optimize store layouts and product placements.

- Loss Prevention: YOLOv8 aids in quickly identifying security risks and anomalies, enhancing loss prevention efforts.

- Operational Efficiency: By analyzing customer footfall and identifying bottlenecks, YOLOv8 helps improve overall store functionality.
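For the inventory use case, detections from a model like YOLOv8 still have to be turned into a shelf-level decision. The sketch below counts confident detections per product class and flags classes that fall below a restocking threshold. The detection tuples, class names, confidence cutoff, and minimum stock levels are all hypothetical; in practice they would come from the detector's output and the store's own planning data.

```python
from collections import Counter
from typing import Dict, List, Tuple

Detection = Tuple[str, float]  # (class_name, confidence)


def low_stock(detections: List[Detection], min_stock: Dict[str, int],
              min_conf: float = 0.5) -> Dict[str, int]:
    """Return {product: shortfall} for products with fewer confident detections than required."""
    counts = Counter(name for name, conf in detections if conf >= min_conf)
    return {
        product: required - counts[product]  # Counter returns 0 for unseen products
        for product, required in min_stock.items()
        if counts[product] < required
    }


shelf = [("cola", 0.92), ("cola", 0.88), ("chips", 0.95), ("chips", 0.40), ("water", 0.81)]
print(low_stock(shelf, {"cola": 2, "chips": 3, "water": 1, "juice": 2}))
# {'chips': 2, 'juice': 2}
```

The low-confidence `chips` detection is ignored, so it counts toward the shortfall; tuning `min_conf` trades missed items against false restock alerts.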

Trend #3: Predictive Analytics

What is Predictive Analytics?

Predictive analytics forecasts future outcomes by applying statistical and machine learning techniques, including deep learning, natural language processing, and computer vision, to structured and unstructured data sources. It can handle various data types, including numerical, categorical, text, and time series data, making it versatile for retail applications.

Stand-out features for Retail

- Data-driven forecasting: Predictive analytics leverages vast amounts of data to make accurate predictions about future trends and customer behaviors.

- Real-time insights: It offers up-to-date information, allowing retailers to respond quickly to changing market conditions.

- Personalization capabilities: The technology enables tailored marketing and product recommendations based on individual customer preferences.

- Risk mitigation: By identifying potential issues before they occur, predictive analytics helps retailers minimize losses and optimize operations.

Applications in Retail

- Demand Forecasting: Predictive analytics helps retailers anticipate future demand for products, enabling them to optimize inventory levels and reduce waste.

- Price Optimization: The technology analyzes market trends, competitor pricing, and customer behavior to determine optimal pricing strategies that maximize profits while maintaining competitiveness.

- Customer Segmentation: Predictive analytics categorizes customers based on their behavior, preferences, and purchase history, allowing for more targeted marketing campaigns.

- Fraud Detection: By analyzing transaction patterns, predictive analytics can identify potential fraudulent activities, helping retailers protect their assets and customers.
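Demand forecasting, the first application above, can start from a baseline as simple as single exponential smoothing: each new forecast blends the latest observed sales with the previous forecast. The sketch below assumes a smoothing factor `alpha = 0.5` and made-up weekly sales; production systems typically use richer models that account for seasonality, promotions, and other features.

```python
from typing import List


def exponential_smoothing(sales: List[float], alpha: float = 0.5) -> float:
    """Return the one-step-ahead forecast after processing all observations."""
    if not sales:
        raise ValueError("need at least one observation")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    forecast = sales[0]  # initialize with the first observation
    for actual in sales[1:]:
        # Higher alpha reacts faster to recent sales; lower alpha smooths more.
        forecast = alpha * actual + (1 - alpha) * forecast
    return forecast


weekly_units = [100.0, 120.0, 110.0, 130.0]
print(exponential_smoothing(weekly_units))  # 120.0
```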

Case Study: Unilever's Success with AI Integration in Retail

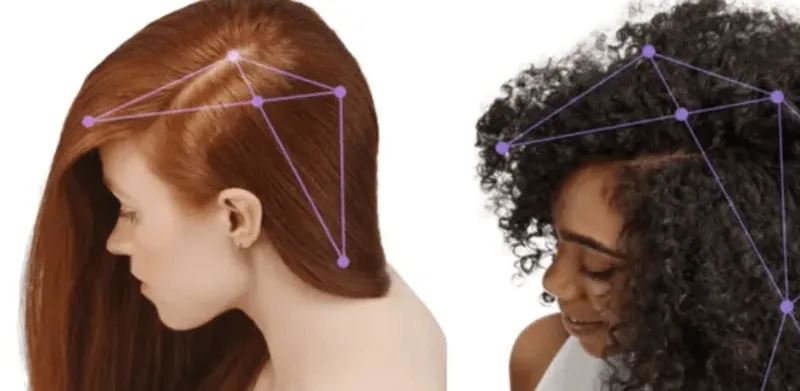

Unilever has successfully leveraged AI and computer vision in its BeautyHub PRO tool, an AI-powered selfie analyzer that offers personalized skincare and haircare recommendations. By analyzing up to 30 visual data points from a selfie, BeautyHub PRO suggests tailored products across Unilever's top brands, transforming how customers shop and engage.

The results have been quite impressive. According to Unilever’s survey, users of BeautyHub PRO are filling their carts with 39% more products and completing 43% more purchases. In 2023, this game-changing tool captivated over 80,000 users and reached over 3 million people. The data-driven insights from BeautyHub PRO are also turbocharging Unilever's marketing efforts, leading to a 23% boost in ad recall and a 7% rise in purchase intent across Southeast Asia.

Spotlight on Retail Innovation:

Home Depot’s AI-Driven Efficiency

Here’s a look at how Home Depot is revolutionizing in-store operations with a blend of computer vision and machine learning through its ML-powered app, "SideKick."

- Product Location: The app uses computer vision to help staff find items in hard-to-reach areas, such as overhead shelves, ensuring customers get what they need faster.

- Product Availability Tracking: Staff capture images using the app to monitor which products are available in the store, helping maintain stock levels efficiently.

- Task Prioritization: A cloud-enabled ML algorithm determines which tasks are actionable and when they should be completed, ensuring staff focus on the most important tasks.

- Restocking Efficiency: Machine vision detects out-of-stock items and assists in their restocking, improving overall inventory management.

- Task Alerts: A common tasking engine alerts associates about the most urgent tasks, helping them prioritize their work effectively.

- Dashboard Visibility: The app features a dashboard for associates and managers, highlighting where and how tasks should be completed.

- Platform Integration: Integration with other platforms ensures that all data and task prioritization are up to date and aligned with Home Depot's broader business goals.
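The idea of a tasking engine that surfaces the most urgent task first maps naturally onto a priority queue. The sketch below uses Python's `heapq` with a made-up urgency score that weights out-of-stock tasks and waiting time. Home Depot's actual prioritization logic is not described here, so the scoring rule, task names, and fields are purely illustrative.

```python
import heapq
import itertools
from typing import List, Tuple


class TaskQueue:
    """Min-heap of (negated urgency, insertion order, task name)."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._counter = itertools.count()  # tie-breaker keeps FIFO order for equal urgency

    def add(self, name: str, out_of_stock: bool, minutes_waiting: int) -> None:
        # Hypothetical urgency rule: out-of-stock tasks dominate, then waiting time.
        urgency = (100 if out_of_stock else 0) + minutes_waiting
        # heapq is a min-heap, so negate urgency to pop the most urgent task first.
        heapq.heappush(self._heap, (-urgency, next(self._counter), name))

    def next_task(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = TaskQueue()
queue.add("Face aisle 12 shelf", out_of_stock=False, minutes_waiting=30)
queue.add("Restock paint rollers", out_of_stock=True, minutes_waiting=5)
queue.add("Retrieve overhead item for customer", out_of_stock=False, minutes_waiting=45)
while queue:
    print(queue.next_task())
# Prints: Restock paint rollers, Retrieve overhead item for customer, Face aisle 12 shelf
```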

Nike’s AI-Powered Design Revolution

- Generative AI for Product Design: In 2024, Nike unveiled its Athlete Imagined Revolution (A.I.R.) project, designed to transform product creation by blending cutting-edge AI with traditional design processes.

- The project harnesses generative AI, large language models (LLMs), 3D printing, and computational design to push the boundaries of athletic wear and create innovative, athlete-driven designs.

About Superteams.ai: Superteams.ai matches modern businesses with an exclusive network of vetted, high-quality, fractional AI researchers and developers, and a suite of open-source Generative AI technologies, to deliver impact at scale.