Superteams.ai Digest: AI for Carbon Accounting

In this edition of Superteams.ai monthly AI Digest, we take a look how AI can transform carbon emissions tracking and reporting and the latest buzz in the open-source AI world.

Industry Spotlight

AI for Carbon Accounting

Companies can use carbon accounting to minimize risk, build brand equity, and reduce inefficiency. According to a 2021 survey from Boston Consulting Group, businesses estimate an average error rate of 30-40% in their emissions calculations. This is the accuracy gap: the delta between the emissions an organization thinks they’re producing and the emissions they’re actually producing. This accuracy gap is a business liability – and comprehensive, accurate carbon accounting, a risk mitigation necessity.

In steps artificial intelligence. AI can have a transformative impact in reducing carbon emissions through predictive analytics, enhanced data accuracy, real-time emission tracking, and identification of emission hotspots across global supply chains. AI has the potential to cut global greenhouse gas (GHG) emissions by 4%. How can AI accomplish this?

- Automated Matching of Emission Factors

Accurate carbon accounting hinges on finding the correct emission factors, a complex task due to the diversity of activities and the challenge of matching them to the appropriate factors from extensive databases. New software solutions are emerging that automate the matching of emission factors, providing customized suggestions and simplifying the process. - AI-Powered Data Parsing

AI can help businesses save time by extracting activity data from unstructured documents, such as PDF invoices, streamlining data processing. - Optical Character Recognition (OCR)

OCR technology supports carbon accounting by converting physical documents into digital, machine-readable formats. This accelerates data entry and minimizes manual errors. - Generative AI for Reporting

Generative AI facilitates carbon reporting by automating data analysis and report generation. By processing large datasets from various sources, it creates detailed data models and tailors reports to meet industry-specific needs. - Anomaly Detection and Carbon Reduction Recommendations

AI can quickly detect anomalies in data, signaling potential errors or opportunities for emission reduction. In addition to ensuring data accuracy, AI offers actionable recommendations for reducing carbon emissions.

According to the 2024 Decarbonization Report, companies committed to climate action are experiencing $200 million in annual net gains. By adopting advanced practices like AI integration and product-level carbon tracking, businesses could unlock up to 4.5 times more value.

Highlights

A Quick Recap of the Top AI Trends of the Month

Llama 3.2 includes a range of lightweight and multimodal models

Llama 3.2 is the latest iteration in Meta's AI lineup bringing powerful enhancements across performance, size, and usability across different devices. Below are the key details regarding the models available in this release:

- Small and medium-sized vision LLMs: 11B parameters and 90B parameters

- Lightweight, text-only models: 1B parameters and 3B parameters

Performance:

The 11B and 90B models:

- The 11B and 90B models excel in image reasoning tasks, such as document understanding, image captioning, and visual grounding.

- They are competitive with top models like Claude 3 Haiku and GPT4o-mini in image recognition and visual understanding tasks.

The 1B and 3B models:

- The 1B and 3B models demonstrate strong capabilities in multilingual text generation and tool calling.

- They outperform smaller models like Gemma 2 2.6B and Phi 3.5-mini in tasks such as instruction following and summarization.

Context Length:

- The models support an impressive context length of up to 128K tokens, allowing for extensive input processing without sacrificing performance.

Use Cases

11B & 90B Models:

- Ideal for complex tasks involving both text and images, such as analyzing sales data from graphs or interpreting maps.

The 1B and 3B models:

- Perfect for on-device applications that prioritize privacy and efficiency, enabling developers to create personalized applications that handle sensitive data locally without cloud reliance.

Black Forest Labs announces Flux 1.1:

The launch of FLUX 1.1 [pro] marks a significant advancement in image-generative technology. Here are the key details regarding this release:

- Enhanced Speed: FLUX 1.1 [pro] delivers six times faster generation than its predecessor, significantly improving workflow efficiency.

- Improved Image Quality: The model enhances image quality, prompt adherence, and diversity while maintaining the same output as before but at twice the speed.

- Performance Benchmarking: Under the codename "blueberry," FLUX 1.1 [pro] has surpassed all competitors in the Artificial Analysis image arena, achieving the highest overall Elo score.

- Upcoming Ultra High-Resolution Generation: The model is set up for fast ultra high-resolution generation, allowing users to create images up to 2k resolution without losing prompt fidelity.

The Launch of Pixtral 12B by Mistral AI

- Technical Specifications:

- Model Type: Natively multimodal, trained with interleaved image and text data.

- Architecture:

- Vision Encoder: A new 400M parameter vision encoder trained from scratch.

- Decoder: A 12B parameter multimodal decoder based on Mistral Nemo.

- Variable Image Support: Capable of processing images of various sizes and aspect ratios.

- Context Length: Supports a long context window of 128K tokens, allowing multiple images to be processed simultaneously.

- Performance:

- Multimodal Tasks: Excels in instruction following and achieves a score of 52.5% on the MMMU reasoning benchmark, outperforming many larger models.

- Text-Only Benchmarks: Maintains state-of-the-art performance on text-only tasks, ensuring no compromise in capabilities.

- Instruction Following: Outperforms other open-source models (like Qwen2-VL 7B and LLaVa-OneVision 7B) by a relative improvement of 20% in text instruction following benchmarks.

- Use Cases:

- Document Understanding: Effective in tasks such as chart and figure understanding, document question answering, and multimodal reasoning.

- Image Processing Flexibility: Can ingest images at their natural resolution, providing users with flexibility in token usage for image processing.

The Release of YOLO11 by Ultralytics

YOLO11 marks another milestone in the Ultralytics YOLO series, offering state-of-the-art performance for real-time object detection and other computer vision tasks.

- Key Features

- Architecture: YOLO11 features an enhanced backbone and neck architecture for improved feature extraction.

- Model Variants: Includes multiple models such as YOLO11n, YOLO11s, YOLO11m, YOLO11l, and YOLO11x, each optimized for different performance levels.

- Parameter Efficiency and speed: The YOLO11m model achieves higher mean Average Precision (mAP) on the COCO dataset with 22% fewer parameters than its predecessor, YOLOv8m.

- Deployment Flexibility: Can be deployed across edge devices, cloud platforms, and systems with NVIDIA GPUs, making it suitable for a wide range of environments.

- Versatility: Supports various tasks including object detection, instance segmentation, pose estimation, and oriented object detection.

Sneak Peek into Some Top Trending Blogs from September

Building Agentic Code RAG Using CodeLlama and Qdrant

Learn how to create an AI-driven code analysis tool leveraging Code Llama and Qdrant. This innovative system enables loading, parsing, and analyzing code, providing intelligent suggestions for improvement. Discover the technical implementation details and understand its potential to enhance code review efficiency and boost developer productivity.

Mastering Fantasy Sports Strategy with Multistage AI: Unlocking the Power of LangChain and Qdrant

Explore how LangChain and Qdrant integrate to transform fantasy sports strategies, leveraging predictive analytics and real-time optimization for informed decision-making.

Building Multimodal AI in Healthcare Using GPT and Qdrant

Learn how GPT and Qdrant's Multimodal AI technology is transforming radiology, enabling seamless analysis of medical reports and images to drive productivity and precision patient care. Check out the latest tech blog for details.

What’s New in AI Research?

MSI-Agent: Incorporating Multi-Scale Insight into Embodied Agents for Superior Planning and Decision-Making

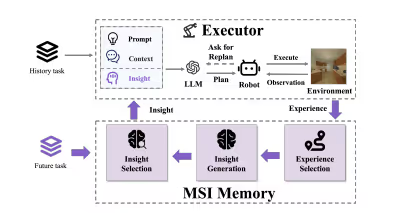

This paper by Stanford University researchers introduces the MultiScale Insight Agent (MSI-Agent)—an innovative solution designed to revolutionize decision-making in large language models (LLMs). MSI tackles the common issues of irrelevant and limited insights through a powerful three-step pipeline: experience selector, insight generator, and insight selector. By effectively summarizing and scaling insights, MSI generates both task-specific and high-level insights, all stored in a comprehensive database for smarter, more informed decision-making.

ROBIN3D : IMPROVING 3D LARGE LANGUAGE MODEL VIA ROBUST INSTRUCTION TUNING

The Robin3D model is setting a new benchmark for 3D AI agents by advancing the field of 3D Large Language Models (3DLLMs). With its groundbreaking Robust Instruction Generation (RIG) engine, Robin3D is trained on an expansive dataset of 1 million instruction samples, including 344K adversarial and 508K diverse instructions, enhancing its ability to follow instructions in complex 3D environments. Equipped with cutting-edge modules like the Relation-Augmented Projector and ID-Feature Bonding, Robin3D excels in challenging spatial tasks, achieving impressive gains—7.8% in grounding and 6.9% in captioning—all without task-specific fine-tuning.

Molmo and PixMo: Open Weights and Open Data for State-of-the-Art Multimodal Models

The Molmo family of multimodal vision-language models (VLMs) is shaking up the open-source world by delivering cutting-edge performance without the need for proprietary data. Developed by the Allen Institute for AI and University of Washington, Molmo taps into its unique PixMo dataset, built entirely from human speech-based descriptions, ensuring rich, detailed image captions. Paired with diverse fine-tuning datasets, including in-the-wild Q&A and 2D pointing data, Molmo offers an exceptional user interaction experience. The flagship 72B model not only outperforms other open-weight models but also competes head-to-head with proprietary giants like GPT-4 and Claude 3.5.

What's New at Superteams.ai

Latest Blog Highlights

How to Implement Visual Recognition with Multimodal Llama 3.2: A Step-by-Step Guide

In this blog, we show you a step-by-step coding tutorial on deploying Multimodal Llama 3.2 for visual recognition tasks like generating creative product descriptions.

A Guide to Invoice Parsing and Analysis Using Pixtral-12B Model for OCR and RAG

In this tutorial, we’ll walk you through the steps of deploying Pixtral-12B, a multimodal model that excels in parsing invoices with ease. Trained on a diverse range of image and text data, this open model is a powerhouse for automating your document workflows.

A Guide to Incorporating Multimodal AI into Your Business Workflow

In this blog, we explore how multimodal models can transform your workflows. Imagine using vision-powered assistants to boost your team’s efficiency or automating product catalog updates to keep everything consistent. You can even extract key details from unstructured data, like invoices, without any manual work. Plus, with AI analysts, generating detailed reports from documents and spreadsheets becomes effortless.

About Superteams.ai: Superteams.ai matches modern businesses with an exclusive network of vetted, high-quality, fractional AI researchers and developers, and a suite of open-source Generative AI technologies, to deliver impact at scale.